This week, we take a look at interpretability applied to a Go-playing neural network, glitchy tokens, and the opinions and actions of top AI labs and entrepreneurs.

Watch this week's MLAISU on YouTube or listen to it as a podcast.

Research updates

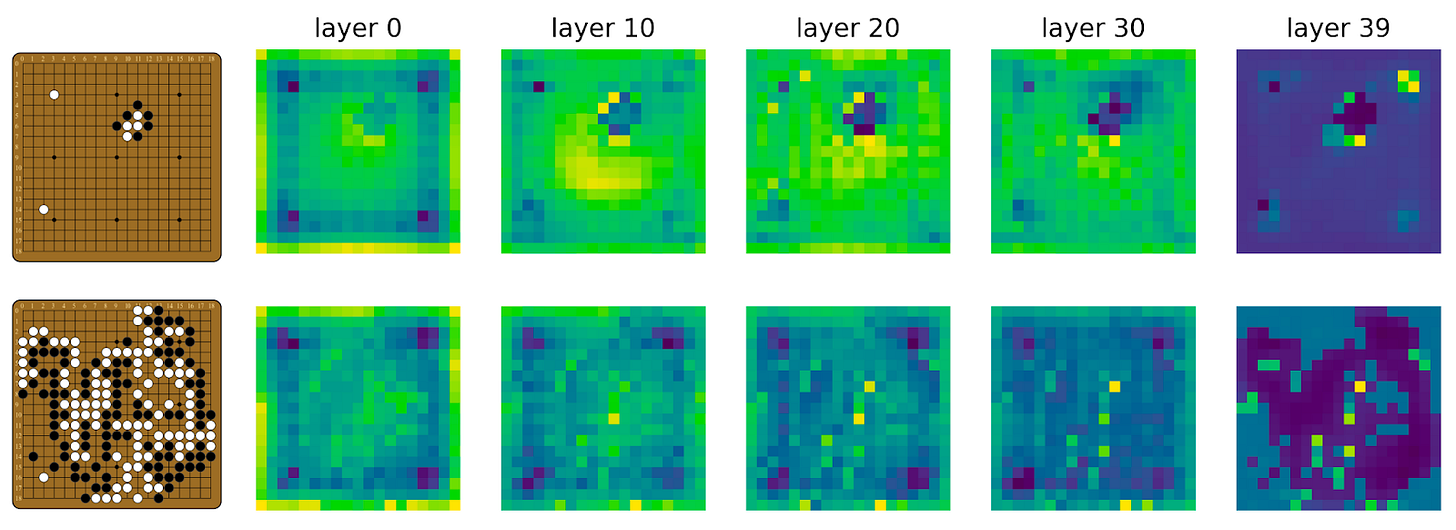

We'll start with the research-focused updates from the past couple of weeks. First off, Haoxing Du and others used interpretability methods to analyze how Leela Zero, a Go-playing neural network, reads the game board under specific conditions.

They specifically investigate ladders, simple scenarios that require one to understand how the game will develop many steps into the future in order to choose between two actions. See an explanation here. Other Go-playing neural networks are unable to do this without external tools, but Leela Zero is significantly biased towards the right choice, indicating some type of understanding.

With a methodology similar to causal scrubbing and path patching, they find that information about board positions is generally represented in the same position throughout the network due to the residual stream.

The report includes several other findings: global board move information is stored at the edges of the board; channel 166 provides information about the best moves; the four diagonal directions of ladders perform similarly but use different mechanisms; and the early channel 118 completely changes the ladder actions but also activates when no ladder is present.

Haoxing Du writes that she is pleasantly surprised at how easy it is to interpret such a large model and proposes three next steps for this line of research.

Another piece of work that has evolved over the past month is the SolidGoldMagikarp investigation, where Rumbelow and Watkins develop ways to find the prompt that best elicits a specific output. As seen in the table below, getting the highest-probability completions sometimes requires very bizarre inputs.

From this, they developed a method to understand where clusters of tokens would exhibit similar behavior and found a specific cluster of very "weird" words such as " SolidGoldMagikarp" and " NitromeFan" that, in ChatGPT's mind, mean "distribute" and "newcom" (and other things depending on the model), respectively.

There are many such examples, and they indicate that these models can be non-deterministic even at temperature 0. Temperature is a sampling parameter that, when set to 0, is supposed to make the output completely deterministic, so this should not be possible.
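To make the temperature remark concrete, here is a minimal sketch of temperature sampling in Python. The function name `sample_token` and the example logits are hypothetical, and real language-model decoders are considerably more involved. The point is only that at temperature 0 the choice reduces to an argmax, so the same logits should always give the same token.

```python
import math
import random


def sample_token(logits, temperature, rng=random):
    """Pick a token index from raw logits at the given temperature."""
    if temperature == 0:
        # Greedy decoding: always take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Divide by the temperature, then apply a numerically stable softmax.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    weights = [math.exp(x - peak) for x in scaled]
    # Draw one index in proportion to the softmax weights.
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three tokens
greedy = [sample_token(logits, 0) for _ in range(5)]
```

Under this simplified picture, repeated calls at temperature 0 always return the same index, so any variation would have to come from somewhere other than the sampling step.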

I love this work because it shows how we can extract very weird and breakable properties of neural networks using the methods we have available today. Their follow-ups include more findings and a look at understanding why these specific tokens exhibit such weird behavior, such as SolidGoldMagikarp being a Reddit user who was part of a Reddit effort to count to infinity.

Another major piece of news from the scientific community is the $20 million National Science Foundation grant that Dan Hendrycks helped bring about in collaboration with Open Philanthropy. This is a huge step in institutionalizing AI safety.

Sam Altman's path to AGI

In less technical news, Sam Altman has published "Planning for AGI and Beyond", a piece detailing how he thinks about AI risk and safety. He emphasizes the importance of minimizing the risks and maximizing the benefits from artificial general intelligence (AGI) for humanity, democratizing the development and governance of AGI, and avoiding massive risks from this development.

The piece is generally good news for the safety of future neural networks. He says OpenAI will have external audits of its models before deployment and that safety-relevant information will be shared between AGI companies. The post was published just a day after Sam Altman and Eliezer Yudkowsky were seen together in a selfie, though it was written before their meeting.

Additionally, one of the original rationalists, Robin Hanson, has released a restatement of his AI risk perspective, with arguments based on historical trends and transition periods in societal growth. He writes that he remains skeptical of the core arguments for AI safety because they rest so heavily on uncertainty about what high-risk AI systems will look like.

The great people at Conjecture, the London-based AI safety startup, have also released their major strategy, focusing on "minimizing magic" in neural systems. By building systems that logically emulate existing cognitive systems' functionality (reminiscent of parts of brain-like AGI safety), they hope to have systems that we can understand better and which are more corrigible, i.e. we know how to update them after deployment in a safe way. The details are a bit unclear but this document presents the first steps in this direction.

Another for-profit AI safety organization that was just announced is Leap Labs, which will work on creating a "universal interpretability engine" that will be able to interpret any neural system. It was founded by one of the authors of the aforementioned SolidGoldMagikarp report.

Meanwhile, Elon Musk wants to join the AI race with a TruthfulGPT, a so-called "based AI". Throughout the years, he has often emphasized AI safety as paramount and even co-founded OpenAI with this principle. However, now Tesla makes robots that can build more of themselves and we'll see where his new AI startup goes.

Other research

In other news…

Researchers find that Trojan neural networks, networks updated to react in a bad way to a specific trigger prompt, work as good benchmarks for interpretability methods. They propose a benchmark based on highlighting and reconstructing trojan triggers, a bit like the SolidGoldMagikarp work.

Another team finds that they can "poison" (put bad data into) large-scale datasets, with significant negative effects on performance. They can poison 0.01% of the LAION-400M dataset for just $60 and propose two specific poisoning attacks.
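As a rough back-of-the-envelope check on those numbers, the sketch below assumes a nominal dataset size of 400 million samples (taken from the dataset's name, not from the paper) and simply divides the quoted $60 by the number of poisoned samples. The variable names are illustrative only.

```python
# Assumption: LAION-400M is treated as exactly 400 million samples.
DATASET_SIZE = 400_000_000
TOTAL_COST_USD = 60  # cost quoted in the summary above

# 0.01% is one part in 10,000; integer division avoids float rounding.
poisoned_samples = DATASET_SIZE // 10_000
cost_per_sample = TOTAL_COST_USD / poisoned_samples

print(f"{poisoned_samples:,} poisoned samples")
print(f"${cost_per_sample:.4f} per poisoned sample")
```

Under that assumption, the attack touches about 40,000 samples at well under a cent each.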

Opportunities

This week, we see an array of varied and interesting opportunities as well:

Stanford's AI100 prize is for people to write essays about how AI will affect our lives, work, and society at large. The applications close at the end of this month: https://ais.pub/ai100

You can apply for a paid three-month fellowship with AI Safety Info to write answers and summaries for alignment questions and topics: https://ais.pub/stampy

The Future of Life Institute has rolling applications open for remote, full-time, and internship positions: https://ais.pub/futurelife

Similarly, the Epoch team has an expression of interest to join their talented research team: https://ais.pub/epoch

You can apply for a postdoc / research scientist position in language model alignment at New York University with Sam Bowman and his team: https://ais.pub/nyu

Of course, you can join our AI governance hackathon at ais.pub/aigov.

Thank you for following along in this week's ML & AI Safety Update and we'll see you next week!